Artificial intelligence (AI) is rapidly transforming the financial industry, offering both unprecedented opportunities and daunting new risks. The BSA Coalition's recent AI and Fraud webinar brought together leading voices in technology, compliance, and risk management to address AI's double-edged nature as a tool for both fraudsters and fraud fighters.

The Rising Tide of AI-Driven Fraud

AI is no longer a futuristic concept. It is already being weaponized by bad actors, and the stakes are high: fraud losses are estimated at up to 5% of company revenues worldwide. Fraudsters now have access to low-cost, easy-to-use generative AI tools that allow them to:

- Fabricate highly realistic documents (IDs, passports, driver’s licenses)

- Clone voices and create deepfake videos

- Generate synthetic emails and text messages that mimic real individuals and corporate communication styles

These tools are widely available, giving criminals a professional edge without requiring professional resources. The result: a surge in sophisticated fraud schemes that can easily bypass traditional verification methods.

Regulatory Spotlight: FinCEN’s November 2024 Advisory

The U.S. Treasury’s Financial Crimes Enforcement Network (FinCEN) recently issued a pivotal advisory highlighting the explosion of deepfake and synthetic ID fraud. Key points include:

- Generative AI is being used to create fraudulent identification and manipulate financial documents.

- AI-generated content is increasingly used in phishing, spear-phishing, and “whaling” attacks (targeting high-level executives).

- The cost and complexity of perpetrating fraud have dropped dramatically, making it easier for criminals to deceive financial institutions and consumers alike.

Real-World Vulnerabilities: Where Institutions Are at Risk

The webinar highlighted the immediate, concrete ways AI-driven fraud is affecting financial institutions today. Panelists described how fraudsters use advanced technologies to bypass traditional safeguards and exploit new vulnerabilities across the industry. Examples included:

- Fraudulent loan documents that pass both visual inspection and OCR (optical character recognition) tests

- Voice cloning attacks impersonating executives to authorize wire transfers

- AI-generated emails and texts that pressure recipients into urgent, fraudulent actions

- Use of corporate video tools and social media posts to harvest executive voices and images for impersonation

Public-facing executives are prime targets for AI-driven impersonation. Institutions should educate leaders about the risks of sharing audio and video online, and consider monitoring for unauthorized use of executive likenesses in digital channels.

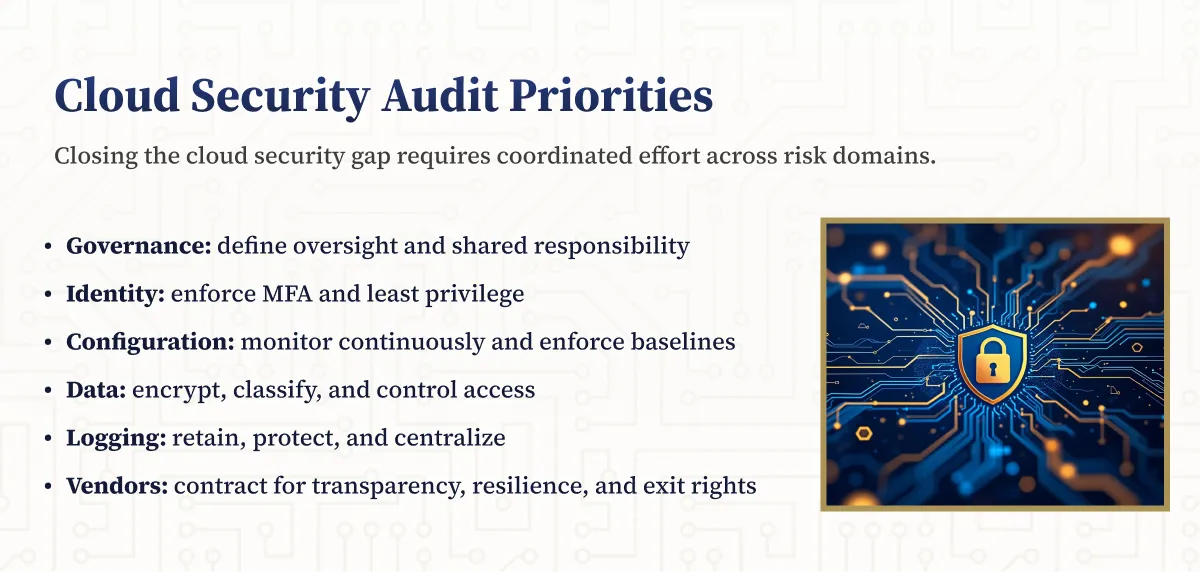

Governance Gaps: The Need for Clear AI Policies

A recurring theme from the discussion: confusion and inconsistency around AI governance. Many employees use AI tools without informing leadership, often because there is no clear policy or definition of what constitutes “AI” within the organization. This lack of governance makes it difficult to set effective controls and leaves institutions exposed.

Effective AI risk management requires coordination between compliance, fraud, IT, vendor management, and business units. Regular cross-departmental reviews ensure that policies are comprehensive, controls are effective, and emerging risks are quickly identified and addressed.

Actionable Steps for Financial Institutions to Protect Against AI Threats

1. Define and Enforce AI Governance

- Establish clear definitions of AI, its approved uses, and data inputs.

- Develop usage policies and communicate them to every department, from underwriting to IT risk.

- Foster cross-departmental collaboration to ensure policies are comprehensive and risks are monitored from multiple perspectives.

2. Leverage AI for Detection and Defense

- Deploy AI tools for fraud detection, such as:

  - Computer vision to spot duplicate images in appraisals

  - Natural language processing (NLP) and OCR to validate income and deposit documents

  - AI-generated content detection software to flag synthetic IDs and communications

  - Reverse image lookup to uncover reused or AI-created photos

- Automate wherever possible to keep pace with the speed and scale of AI-driven fraud.
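Computer vision for duplicate detection can take many forms. As one minimal illustration, the Python sketch below uses an average hash (aHash), a simple perceptual-hashing technique, to flag appraisal photos that look nearly identical even after small edits such as a brightness change. It assumes the images have already been decoded into grayscale pixel grids (rows of 0-255 integers) at least 8x8 pixels in size; the function names and the 5-bit distance threshold are hypothetical choices, not any particular vendor's method. Production tools typically rely on more robust features and an imaging library such as Pillow for decoding.

```python
from itertools import combinations

HASH_SIZE = 8  # 8 x 8 grid of cells -> 64-bit hash


def average_hash(pixels: list[list[int]], size: int = HASH_SIZE) -> int:
    """Average hash (aHash) of a grayscale image given as rows of 0-255 ints.

    Assumes the image is at least `size` pixels tall and wide.
    """
    height, width = len(pixels), len(pixels[0])
    cells = []
    # Shrink the image to size x size by averaging each rectangular block.
    for i in range(size):
        r0, r1 = i * height // size, (i + 1) * height // size
        for j in range(size):
            c0, c1 = j * width // size, (j + 1) * width // size
            block = [pixels[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    # One bit per cell: 1 if the cell is brighter than the overall mean.
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def find_near_duplicates(images: dict[str, list[list[int]]],
                         max_distance: int = 5) -> list[tuple[str, str, int]]:
    """Return (name_a, name_b, distance) for every pair within max_distance bits."""
    hashes = {name: average_hash(px) for name, px in images.items()}
    matches = []
    for a, b in combinations(sorted(hashes), 2):
        distance = hamming(hashes[a], hashes[b])
        if distance <= max_distance:
            matches.append((a, b, distance))
    return matches
```

Because a uniform brightness shift moves every cell and the overall mean by the same amount, the hash stays the same, so a lightly retouched copy of a reused photo still matches. A flagged pair is a lead for a human reviewer, not proof of fraud.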

3. Reassess and Update Cybersecurity Policies

- Review cyber insurance policies for clauses that specifically address AI-enabled fraud.

- Update incident response plans to address AI-related incidents, not just traditional data theft.

- Monitor for fraud incidents that damage trust and credibility, even when no data is stolen.

4. Keep a Human in the Loop

- AI is not a "set it and forget it" solution. Human oversight is essential to:

  - Detect hallucinations and inconsistent outputs

  - Catch false positives and missed fraud cases

  - Continuously tune and audit AI models

- Invest in employee training to make staff your first line of defense.

- Emphasize the importance of independent verification for high-risk or urgent requests, especially those appearing to come from senior leaders.

- Regularly update training to address new AI-driven social engineering tactics, such as urgent requests or authority-based manipulation.
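Independent verification works best when its triggers are written down in advance rather than left to judgment under pressure. The Python sketch below shows one hypothetical way to encode such triggers for payment requests: it flags high-value amounts, new or changed beneficiaries, urgency language, and remote requests attributed to executives. The field names, dollar threshold, keyword list, and titles are illustrative assumptions, not a regulatory standard, and keyword matching in particular is crude and easy for a fraudster to avoid.

```python
from dataclasses import dataclass

# Hypothetical policy values; each institution would set its own.
HIGH_RISK_AMOUNT = 25_000
URGENCY_TERMS = ("urgent", "immediately", "asap", "confidential", "right away")
EXECUTIVE_TITLES = {"ceo", "cfo", "coo", "president", "treasurer"}
REMOTE_CHANNELS = {"email", "sms", "voice", "video"}


@dataclass
class PaymentRequest:
    requester_title: str   # title the requester claims, e.g. "CFO"
    channel: str           # "email", "sms", "voice", "video", or "in_person"
    amount: float
    message: str
    new_beneficiary: bool  # True if the payee is new or its account details changed


def verification_reasons(req: PaymentRequest) -> list[str]:
    """List the red flags that should trigger out-of-band verification."""
    reasons = []
    if req.amount >= HIGH_RISK_AMOUNT:
        reasons.append("high-value transfer")
    if req.new_beneficiary:
        reasons.append("new or changed beneficiary")
    text = req.message.lower()
    if any(term in text for term in URGENCY_TERMS):
        reasons.append("urgency language")
    # Voice and video are included because both can now be cloned or faked.
    if (req.requester_title.lower() in EXECUTIVE_TITLES
            and req.channel.lower() in REMOTE_CHANNELS):
        reasons.append("remote request attributed to an executive")
    return reasons


def requires_callback(req: PaymentRequest) -> bool:
    """True if the request should be held until independently confirmed."""
    return bool(verification_reasons(req))
```

A flagged request would then be confirmed through a callback to a contact already on file, never a number or link supplied in the request itself, since a cloned voice or spoofed email can provide convincing contact details of its own.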

5. Manage Executive Exposure

- Educate executives about the risks of sharing audio and video online.

- Monitor for unauthorized use of executive likenesses in digital channels.

6. Stay Agile and Proactive

- Establish regular policy reviews and updates.

- Incorporate lessons learned from incidents and stay informed about regulatory and industry developments.

- Recognize that static policies are quickly outpaced by evolving AI threats.

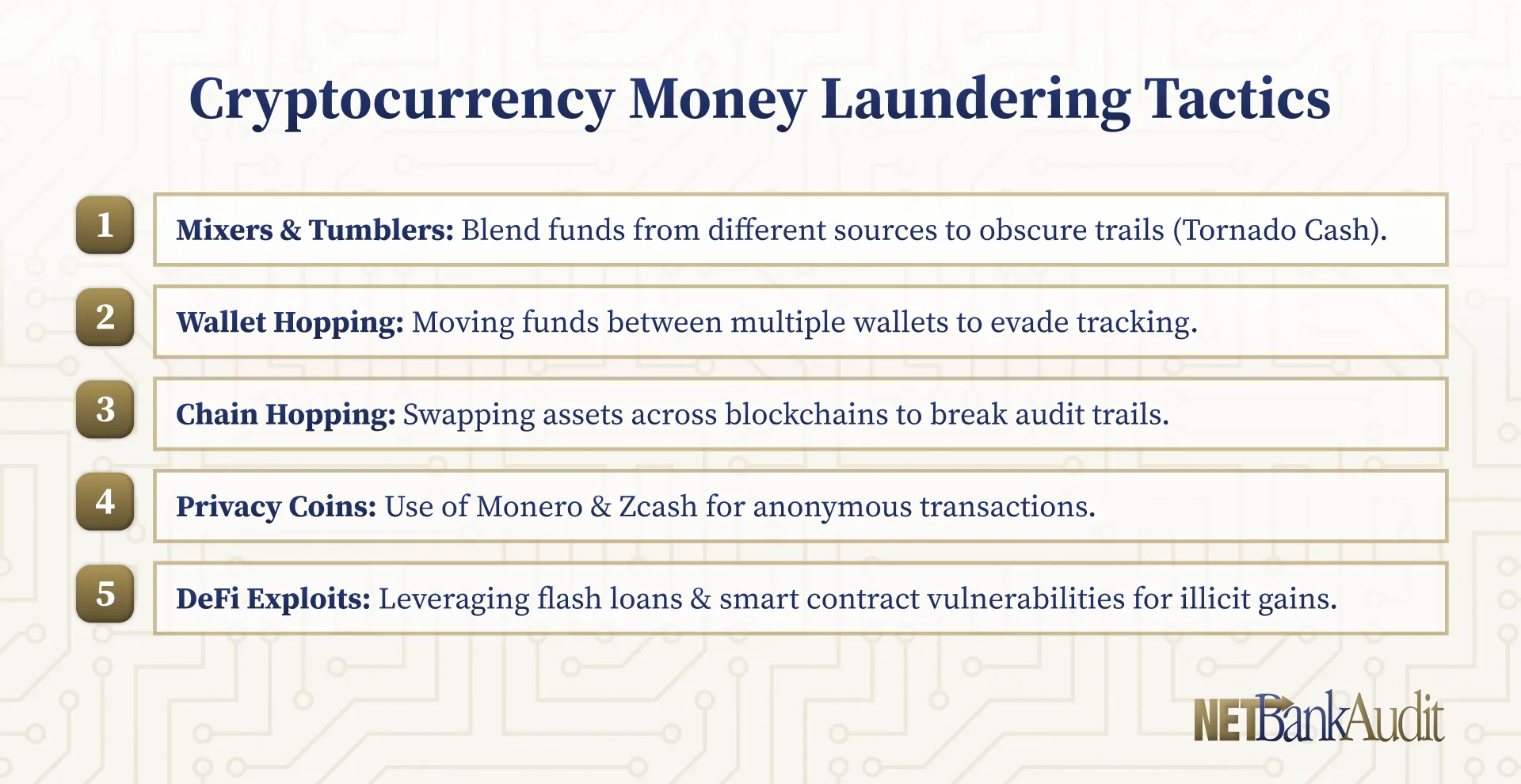

Emerging Threats and Opportunities

- AI vs. AI: The fraud landscape is now a battle of machine against machine. As fraudsters train AI to deceive, institutions must train AI to detect and stop it.

- Whaling Attacks: Advanced phishing campaigns now target executives with AI-generated emails, voice, and video, exploiting urgency and authority.

- Synthetic Deposits: AI is being used to simulate realistic bank activity: not just forged checks, but patterns of transactions that mimic legitimate behavior.

- Policy Lag: Many organizations are developing AI policies reactively. The most proactive institutions are aligning policy with training, technology, and fraud pattern recognition.

- Cultural Shift: The idea that AI will replace staff is a misconception. Instead, AI should empower employees by automating repetitive tasks and equipping them to catch more sophisticated fraud.

Partner with NETBankAudit to Stay Ahead of the AI Curve

The BSA Coalition webinar made one thing clear: AI is already reshaping how fraud is committed, and how it must be prevented.

Protecting your institution from AI-driven fraud requires more than technology; it demands deep regulatory expertise and a thorough understanding of the unique risks facing community financial institutions.

NETBankAudit provides GLBA and FFIEC audits and assessments, combining regulatory insight with technical proficiency to deliver comprehensive risk evaluations with actionable insights. Our team consists of full-time, certified professionals with decades of real-world experience in both security engineering and financial institution compliance, ensuring you receive examiner-aligned, independent guidance.

If your institution is looking to assess AI risks, enhance cybersecurity controls, or prepare for regulatory review, contact NETBankAudit today to put our expertise to work for you.
