Artificial Intelligence (AI) is no longer a distant concept for financial institutions. It is now a core part of daily operations, risk management, and customer engagement. As AI’s influence grows, so do the opportunities and challenges it brings to banks, credit unions, and other financial entities. This article provides a foundational understanding of AI as it relates to financial institutions, explores its benefits and risks, and offers practical guidance for compliance professionals seeking to navigate this evolving landscape.

NETBankAudit experts have over 25 years of experience in AI risk assessment, regulatory compliance, and IT audits for financial institutions. If you have any questions after reading this guide, please reach out to our team.

Check out the BSA Coalition webinar on AI fraud for insights on protecting your institution.

What is Artificial Intelligence?

Artificial Intelligence (AI) has become one of the most widely discussed topics in today’s technology landscape. It is also the subject of much debate: it offers significant potential for commercial and social advancement, yet it can also facilitate criminal and terrorist activity. AI is already in widespread use and supports many of our daily activities. ChatGPT, the language model chatbot developed by OpenAI and itself a form of AI, defines artificial intelligence as follows:

“Artificial Intelligence (AI) is a branch of computer science focused on creating systems or machines that can perform tasks that normally require human intelligence. These tasks include learning from experience or data (e.g., Netflix recommends shows), understanding language (e.g., when Siri or ChatGPT answers you), recognizing images or sounds (e.g., facial recognition in phones), making decisions (e.g., self-driving cars choosing when to stop or turn), and problem-solving (e.g., playing chess or optimizing delivery routes). There are different types of AI, ranging from narrow AI (which does one task really well, like voice assistants) to general AI (which is designed to think and reason like a human).”

Origin and History of Artificial Intelligence

Interestingly, the concept of artificial intelligence appears in early Greek and Chinese mythology, but AI as a field of research has its roots in the 1950s. Specifically, in 1956, Dartmouth computer scientist John McCarthy coined the term “artificial intelligence.” He and others believed that human-level intelligence could be simulated by computers, and this marked the formal start of AI research. During the 1960s and 1970s, researchers built rule-based programs such as ELIZA (a chatbot that mimicked a therapist) and SHRDLU (an early natural language understanding program that let users interact with a virtual "blocks world"). However, significant progress did not occur until the 1980s and 1990s, when expert systems and more sophisticated algorithms were introduced. A famous milestone from this period is the IBM computer Deep Blue’s victory over world chess champion Garry Kasparov in 1997. The next twenty years brought continued advancement, driven by the widespread use of the Internet, greater computing power, and larger datasets for machine learning. Systems increasingly learned from data rather than following hand-written rules, and neural networks evolved into deep neural networks, which powered breakthroughs in image recognition, language processing, and game playing. AI has since entered everyday life through Siri, Alexa, Google Translate, self-driving cars, and facial recognition.

Defining AI in the Financial Sector

AI refers to computer systems designed to perform tasks that typically require human intelligence. These tasks include learning from data, recognizing patterns, understanding language, making decisions, and solving problems. In the context of financial institutions, AI powers everything from automated loan underwriting to real-time fraud detection and customer service chatbots.

How AI Has Evolved in Banking

The journey of AI in finance began with simple rule-based systems and has rapidly advanced to sophisticated machine learning models and natural language processing tools. Today, AI is embedded in core banking systems, digital channels, and risk management frameworks. Its evolution has been driven by increased computing power, the availability of large datasets, and the need for faster, more accurate decision-making.

Artificial Intelligence Use by Financial Institutions

AI is transforming how financial institutions operate and serve their customers. By automating routine processes and providing data-driven insights, AI enables banks and credit unions to deliver faster, more personalized services while reducing operational costs.

- Fraud Detection and Risk Management: AI models analyze vast amounts of transaction data in real time, identifying unusual patterns and potential fraud with greater accuracy than traditional methods.

- Customer Service Automation: AI-powered chatbots and virtual assistants handle routine inquiries 24/7, improving response times and freeing up staff for more complex tasks.

- Credit Scoring and Underwriting: By leveraging alternative data sources, AI can assess creditworthiness more accurately and inclusively, expanding access to credit for underserved populations.

- Algorithmic Trading and Portfolio Management: AI systems process market data at high speed, enabling more informed investment decisions and efficient trade execution.

- Personalized Financial Services: AI tailors product recommendations and financial advice to individual customer profiles, enhancing engagement and satisfaction.

- Operational Efficiency: Automating back-office tasks such as document processing and data entry reduces manual workload and operational costs.
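To make the fraud detection use case above more concrete, the sketch below shows the basic idea of anomaly flagging: a new transaction is compared against the customer’s own history, and amounts far outside the usual range are routed for review. It is a minimal, hypothetical illustration, not how any particular vendor’s model works; production fraud systems typically use machine learning over many features (merchant, location, device, timing), not just amount. It uses a robust z-score based on the median and median absolute deviation (MAD), and the 3.5 cut-off is a commonly cited rule of thumb for that statistic. The function names, threshold, and sample amounts are assumptions.

```python
from statistics import median


def robust_z(amount: float, history: list[float]) -> float:
    """How many scaled median absolute deviations (MADs) `amount` sits from the median of `history`."""
    med = median(history)
    mad = median(abs(x - med) for x in history)
    if mad == 0:
        # MAD is zero when most of the history equals the median;
        # treat any deviation from that typical amount as maximally unusual.
        return 0.0 if amount == med else float("inf")
    # 1.4826 rescales the MAD so it approximates a standard deviation for normally distributed data.
    return abs(amount - med) / (1.4826 * mad)


def flag_for_review(amount: float, history: list[float], threshold: float = 3.5) -> bool:
    """Hypothetical rule: route the transaction to an analyst when its robust z-score exceeds `threshold`."""
    return robust_z(amount, history) > threshold


if __name__ == "__main__":
    # Made-up card purchase amounts for a single customer.
    past_amounts = [42.10, 38.75, 55.00, 47.30, 40.00, 51.20, 44.90]
    for new_amount in (49.99, 2500.00):
        print(new_amount, flag_for_review(new_amount, past_amounts))
```

The median and MAD are used instead of the mean and standard deviation so that a single past outlier does not inflate the "normal" range and hide the next one.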

AI Risks and Threats: What Compliance Professionals Need to Know

Emerging Threats from AI-Driven Fraud

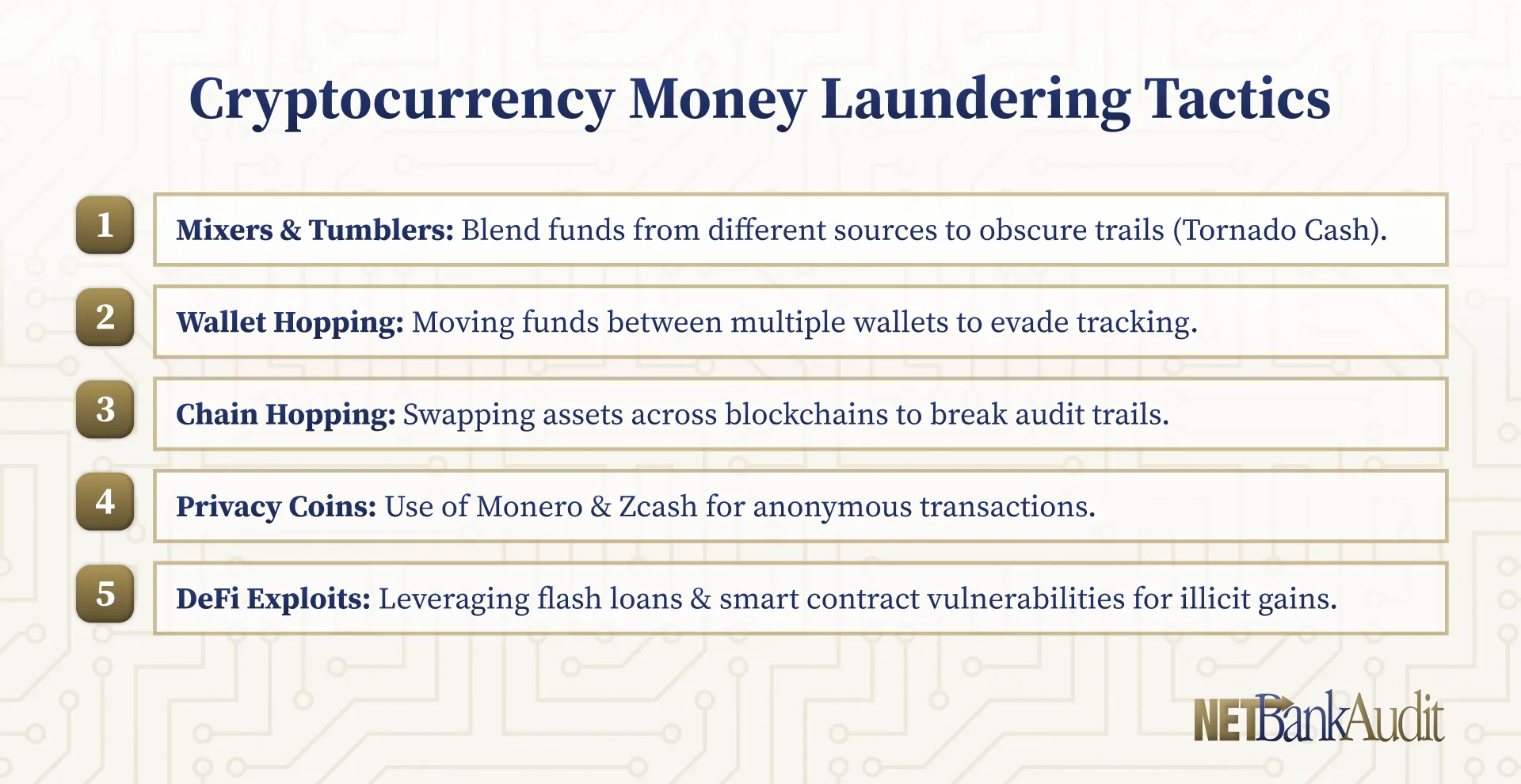

While AI offers significant benefits, it also introduces new risks. Criminals are increasingly using AI to perpetrate sophisticated fraud schemes, including deepfake-based social engineering and AI-driven phishing attacks. These threats are evolving rapidly, making it essential for financial institutions to stay vigilant and proactive.

For a deep dive into the latest AI-driven fraud tactics and regulatory responses, see our Insights From BSA Coalition’s AI and Fraud Webinar.

Key Risk Areas for Financial Institutions

AI’s complexity and reliance on large datasets create unique vulnerabilities. Compliance professionals should be aware of the following risk categories:

- Fraud and Cyberattacks: AI can be weaponized to launch more sophisticated attacks, such as deepfake impersonations and automated phishing campaigns.

- Model Risk: Poorly trained or biased AI models can lead to flawed decisions in lending, trading, or risk assessment.

- Data Privacy Violations: The use of personal and financial data in AI systems increases the risk of privacy breaches and regulatory penalties.

- Regulatory and Compliance Risks: Rapid AI innovation can outpace regulatory frameworks, leading to potential compliance gaps.

- Operational Vulnerabilities: Overreliance on AI may reduce human oversight, creating blind spots in risk management.

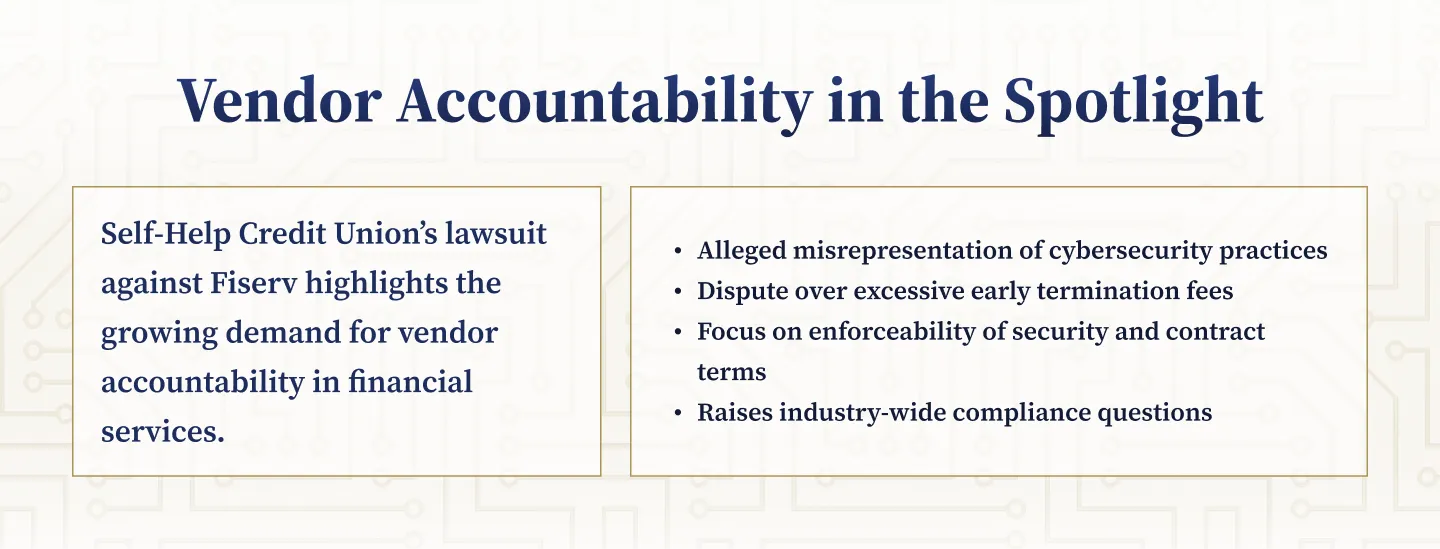

- Third-Party and Vendor Risks: Outsourcing AI solutions introduces additional risks if vendors are compromised or lack robust controls.

- Legal Risks: AI-driven automation in legal and compliance processes must be carefully managed to avoid litigation and regulatory exposure.

AI Governance: Building a Foundation for Responsible Use

Why AI Governance Matters

Effective AI governance is essential for managing risks and ensuring that AI is used ethically and responsibly. Many financial institutions face challenges in defining what constitutes “AI” and establishing clear policies for its use. Without robust governance, institutions may struggle to set effective controls and remain compliant with evolving regulations.

Core Elements of an AI Governance Framework

A well-structured AI governance program should address the following areas:

- Clear Definitions and Policies: Establish what qualifies as AI within the organization and set guidelines for its approved uses.

- Cross-Departmental Collaboration: Involve compliance, IT, risk management, and business units in policy development and oversight.

- Ethical Principles: Ensure fairness, transparency, and privacy in AI applications.

- Ongoing Training and Awareness: Educate staff on AI risks, responsible use, and emerging threats.

- Regular Policy Reviews: Update governance frameworks to reflect new technologies, regulatory changes, and lessons learned from incidents.
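Of the ethical principles listed above, fairness is the one most readily checked with numbers. As one illustration, the sketch below computes an adverse impact ratio: the approval rate for one applicant group divided by the approval rate for a reference group. The function names and sample data are hypothetical, and the 0.8 cut-off is an assumption borrowed from the "four-fifths" rule of thumb in U.S. employment-selection guidance, not a lending standard. A low ratio is a signal for further review, not a conclusion that a model is discriminatory.

```python
def approval_rate(decisions: list[bool]) -> float:
    """Share of applications in a group that were approved (True)."""
    if not decisions:
        raise ValueError("group has no decisions")
    return sum(decisions) / len(decisions)


def adverse_impact_ratio(group: list[bool], reference: list[bool]) -> float:
    """Approval rate of `group` divided by the approval rate of `reference`."""
    reference_rate = approval_rate(reference)
    if reference_rate == 0:
        raise ValueError("reference group has no approvals")
    return approval_rate(group) / reference_rate


def needs_fairness_review(group: list[bool], reference: list[bool], threshold: float = 0.8) -> bool:
    """Flag the model for review when the ratio falls below `threshold` (0.8 is an assumed cut-off)."""
    return adverse_impact_ratio(group, reference) < threshold


if __name__ == "__main__":
    # Made-up outcomes: 60% approval in one group vs. 80% in the reference group.
    group_a = [True] * 60 + [False] * 40
    group_b = [True] * 80 + [False] * 20
    ratio = adverse_impact_ratio(group_a, group_b)
    print(f"ratio={ratio:.2f} review={needs_fairness_review(group_a, group_b)}")
```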

Strategies for Managing AI Risks in Financial Institutions

The impact of artificial intelligence will vary from one financial institution to another, depending on each institution’s circumstances and the relevance of the opportunities and threats discussed above. However, every institution should understand its current position and how artificial intelligence affects its business operations. Specifically, the institution should assess how it is directly or indirectly using artificial intelligence, so that it fully understands both the potential opportunities and the related risks. The following steps are recommended to ensure that your institution is positioned to leverage AI technology appropriately and is prepared for the external AI threat environment.

Assessing Your Institution’s AI Landscape

Understanding how AI is currently used, both directly and indirectly, is the first step in managing its risks and opportunities. Institutions should conduct a thorough assessment of AI’s impact on core business areas, including lending, fraud detection, and customer service.

Key Steps for AI Risk Assessment

- Identify all AI applications and data sources in use.

- Analyze both the potential benefits and risk exposures.

- Evaluate third-party AI vendors and their controls.
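The three steps above are often supported by a simple AI inventory that records each application and assigns it a risk tier. The sketch below is a hypothetical starting point: the fields, scoring weights, and tier cut-offs are placeholders, and an institution would substitute the criteria from its own risk assessment methodology.

```python
from dataclasses import dataclass


@dataclass
class AIUseCase:
    """One entry in a hypothetical AI inventory."""
    name: str
    business_area: str
    third_party: bool          # supplied or hosted by a vendor
    uses_customer_data: bool   # processes personal or financial data
    customer_impact: int       # 1 = internal only, 2 = customer-facing, 3 = drives customer decisions

    def __post_init__(self) -> None:
        if self.customer_impact not in (1, 2, 3):
            raise ValueError("customer_impact must be 1, 2, or 3")


def risk_tier(use: AIUseCase) -> str:
    """Assign an illustrative inherent-risk tier; the weights and cut-offs are placeholders."""
    score = use.customer_impact
    score += 1 if use.uses_customer_data else 0
    score += 1 if use.third_party else 0   # vendor-supplied AI adds third-party risk
    if score >= 4:
        return "high"
    if score >= 2:
        return "medium"
    return "low"


if __name__ == "__main__":
    inventory = [
        AIUseCase("Transaction fraud scoring", "Fraud detection", True, True, 3),
        AIUseCase("Website FAQ chatbot", "Customer service", True, False, 2),
        AIUseCase("Invoice data extraction", "Back office", False, False, 1),
    ]
    for use in sorted(inventory, key=lambda u: u.customer_impact, reverse=True):
        print(f"{use.name:<28} {use.business_area:<18} {risk_tier(use)}")
```

Keeping the inventory as structured data, rather than a free-form document, makes it easier to re-tier every application when the scoring criteria change during a policy review.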

Develop and Enforce Clear AI Policies

Institutions should establish clear, organization-wide policies for AI use. These policies should address ethical considerations, data privacy, and regulatory compliance. Financial institutions (FIs) should also define a governance structure for AI oversight and provide regular training to ensure that all employees understand and adhere to these policies.

Invest in Talent and Technology

Building internal expertise in AI, data science, and machine learning is essential for effective oversight. Institutions should also invest in technology infrastructure that supports secure and compliant AI development and deployment.

Prioritize Use Cases and Piloting Solutions

Focus on high-impact, low-risk AI applications first. Pilot new solutions before full-scale rollout to identify and address potential issues early.

Strengthen Data Governance Foundations

High-quality, secure, and compliant data is the foundation of effective AI. Institutions should improve data governance and integration across the organization to support reliable AI outcomes.

Continuously Monitor and Mitigate Risk

AI risks are dynamic and require ongoing monitoring. Implement systems to detect model bias, drift, and cybersecurity threats, and establish internal audit processes to review AI performance and compliance.
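Drift monitoring can start with a simple comparison of score distributions over time. The sketch below computes the Population Stability Index (PSI), a metric often used in credit-model monitoring, between the distribution of model scores at validation and in a recent period. It is a minimal illustration: the function names and sample counts are hypothetical, and the 0.10 and 0.25 bands are widely quoted rules of thumb rather than regulatory requirements.

```python
import math


def to_proportions(counts: list[int]) -> list[float]:
    """Convert per-bin counts (e.g., applicants per score band) into proportions that sum to 1."""
    total = sum(counts)
    if total == 0:
        raise ValueError("no observations")
    return [c / total for c in counts]


def population_stability_index(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)   # floor empty bins to avoid log(0) and division by zero
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi: float) -> str:
    """Rule-of-thumb bands; an institution would set its own thresholds."""
    if psi < 0.10:
        return "stable"
    if psi < 0.25:
        return "monitor"
    return "investigate"


if __name__ == "__main__":
    # Made-up counts of applicants per score band at model validation vs. this quarter.
    baseline = to_proportions([100, 200, 400, 200, 100])
    current = to_proportions([50, 150, 350, 250, 200])
    value = population_stability_index(baseline, current)
    print(f"PSI={value:.3f} status={drift_status(value)}")
```

PSI only detects that the input population has shifted; whether the model’s accuracy has also degraded still has to be confirmed against actual outcomes.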

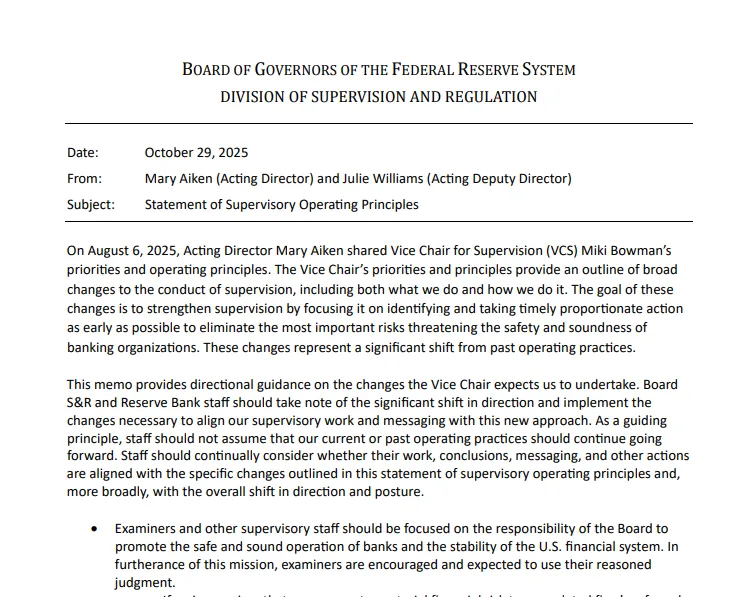

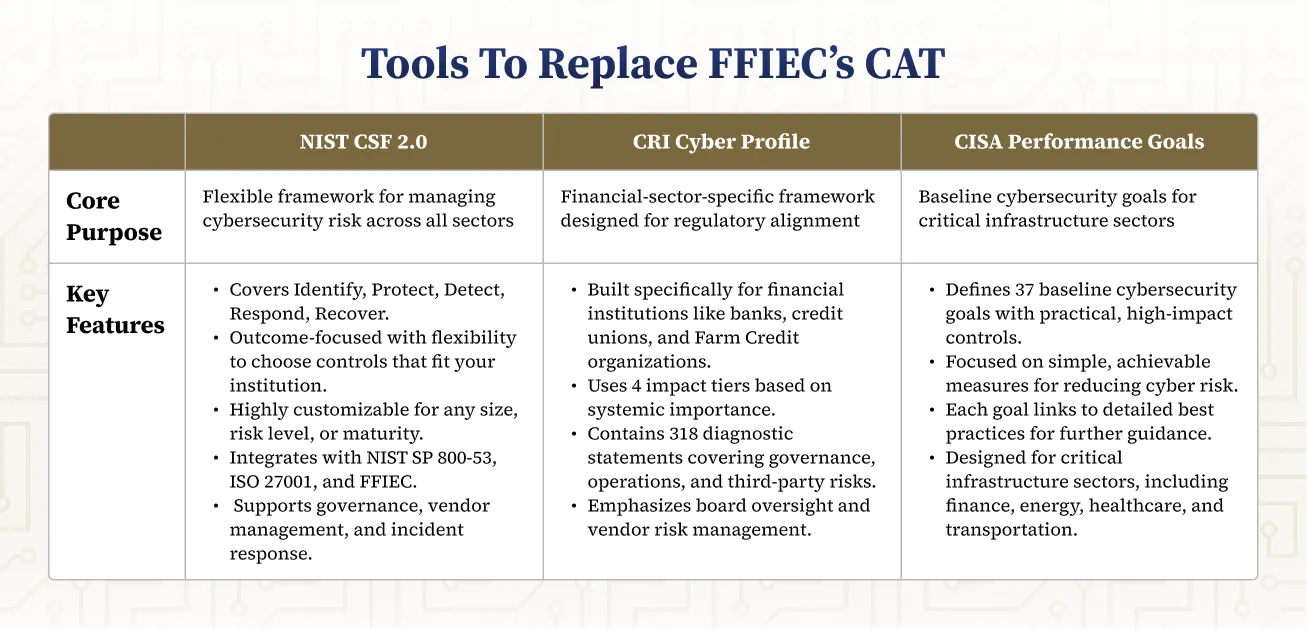

Stay Aligned with Regulations

Track evolving AI-related laws and guidelines, such as the EU AI Act and local financial regulations. Ensure that AI models are explainable and compliant with regulatory expectations.

Engage Stakeholders and Build Adaptability

Transparent communication with customers, regulators, and partners builds trust and supports compliance. Create flexible frameworks that can evolve with technology and regulatory changes, and encourage innovation while managing risk.

Ensure Data Privacy and Security

Prohibit or discourage employee use of public AI services (e.g., publicly available cloud-based AI tools) for business purposes, as search and query activity on these services may be monitored and saved by the provider. If these services are relevant to business functions, license tools and applications that allow them to be used securely in-house.

Monitor Internal and External Environments

Continue to assess the effects of AI on the institution’s internal and external environments. Even if the institution is not directly using AI for internal purposes, consideration should be given to future opportunities. In addition, the external environment should be assessed on an ongoing basis for changes in potential threats and risks that warrant attention.

AI as Both Opportunity and Challenge

AI is reshaping the financial industry, offering new ways to enhance efficiency, improve customer experience, and manage risk. At the same time, it introduces complex threats that require new approaches to governance, oversight, and compliance. The most successful institutions will be those that embrace AI’s potential while proactively managing its risks.

For a detailed exploration of AI-driven fraud, regulatory advisories, and actionable steps for financial institutions, visit our BSA Coalition AI and Fraud Webinar Insights.

NETBankAudit has developed an Artificial Intelligence risk assessment methodology for community financial institutions which can assist your institution in evaluating its current risk posture and identifying related opportunities and threats. Please contact us for additional information.
